User Documentation

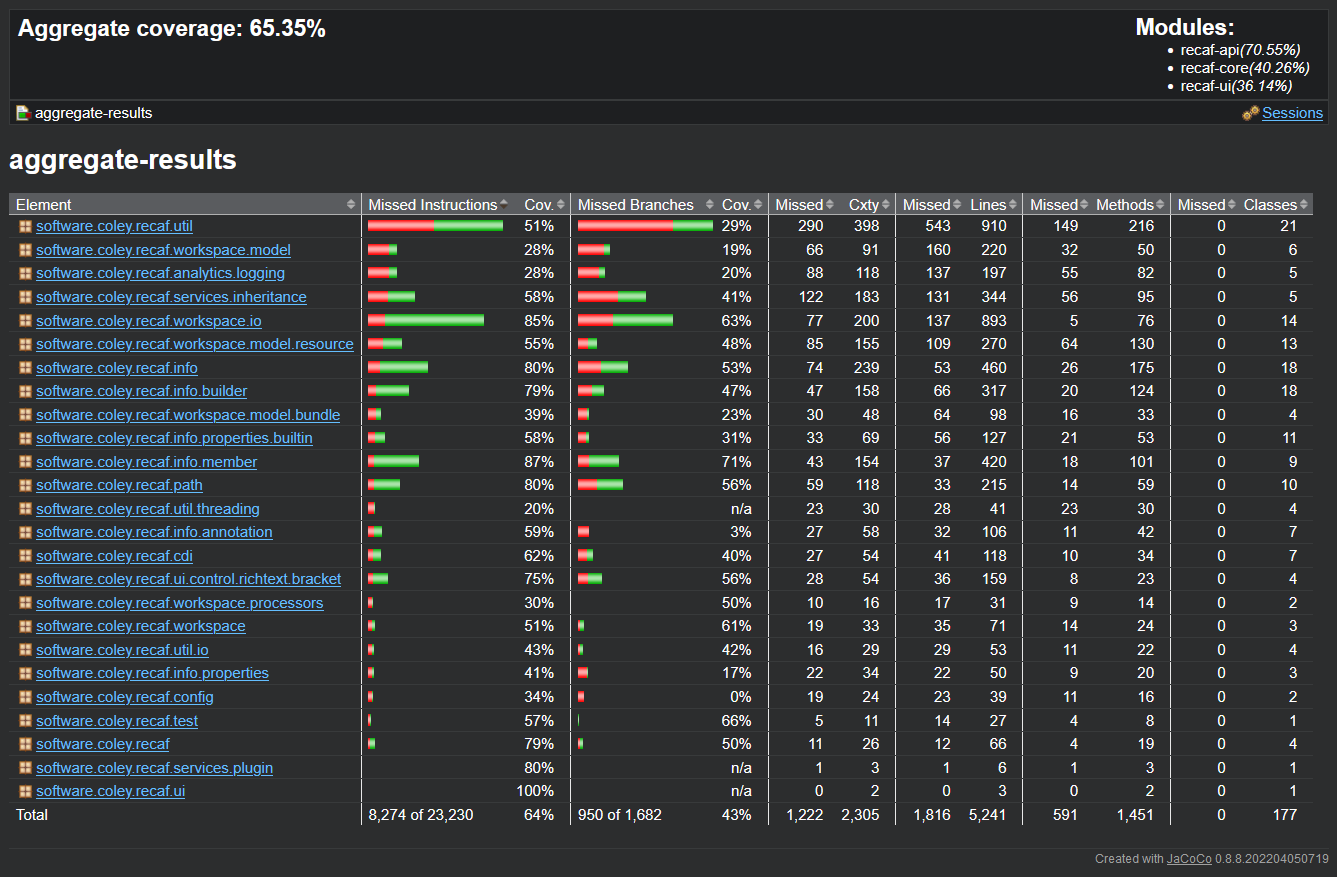

Recaf is a modern Java and Android reverse engineering tool. This entails supporting many tasks such as providing an intuitive UI for navigating application logic, simplifying the process of modifying application behavior, and much more. You can read more about all of the other features provided by Recaf by reading the documentation.

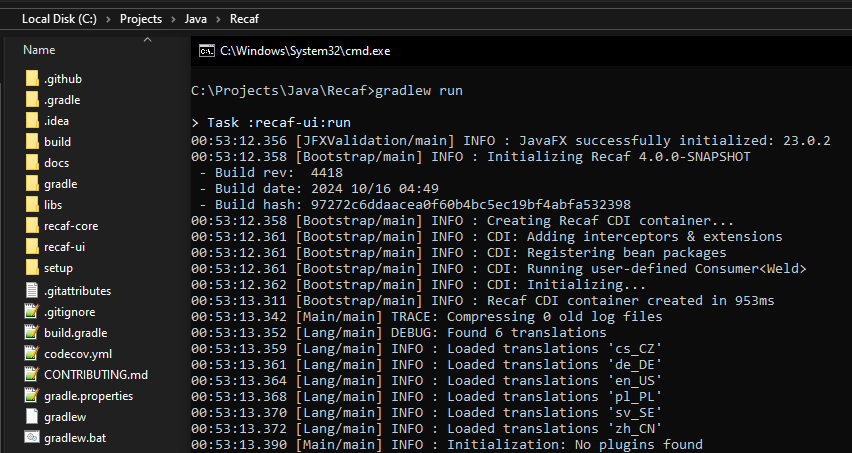

Installing

For most users it is recommended that you use the launcher to install and run Recaf. The launcher will automatically download the correct JavaFX artifacts for your system, allow you to pick which installed version of Java to use when running Recaf, and provide easy single-click access to updates.

For technical users that do not wish to use the launcher, you can follow the manual installation guide.

Requirements

Java 22 or higher

Recaf uses features introduced in JDK 22, thus you will need to have JDK 22 or above installed.

You can install JDK 22 or above from a number of OpenJDK distributors:

JavaFX 22 or higher

Recaf uses features introduced in JavaFX 22, thus you will need to have JavaFX 22 or above downloaded. When you use the launcher to run Recaf the latest compatible version of JavaFX will be automatically downloaded for you.

Installation steps will be provided in the following launcher and manual pages.

Installing via the launcher

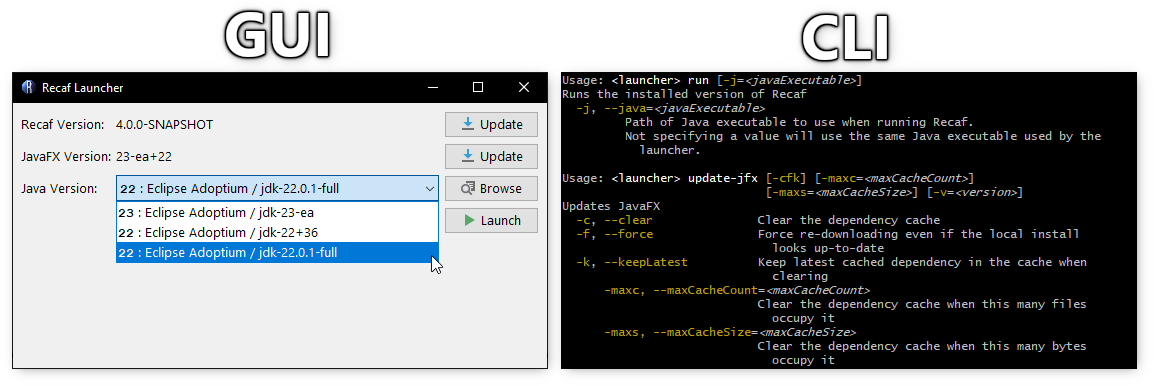

The latest version of the launcher can be downloaded on the project's releases page. Each release offers both a GUI and CLI.

Screenshot of the launcher GUI and CLI in use side-by-side.

Using the GUI

Run the jar file to open the GUI. The first time using the launcher, you will be asked how this launcher should behave.

Welcome screen shown in the launcher the first time you use it.

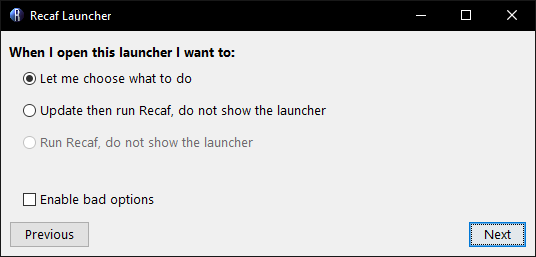

You have the options of:

- Showing the launcher every time

- Updating and running Recaf (without showing the launcher)

- Just running Recaf without updating (and without showing the launcher)

Prompt asking how the launcher should behave on following uses.

If you wish to reset the behavior after picking one of the last two options, delete the %RECAF%/launcher directory where %RECAF% is where Recaf is installed on your system. This location can be different depending on what operating system you use.

| OS | Location |

|---|---|

| Windows | %APPDATA%/Recaf |

| Mac | $HOME/Library/Application Support/Recaf |

| Linux | $XDG_CONFIG_HOME/Recaf or $HOME/.config/Recaf |

If you wish to have Recaf installed in a different location set the RECAF environment variable to the desired directory path.

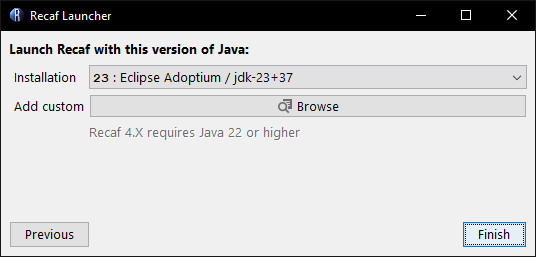

Prompt asking for a Java installation to use Recaf with.

The launcher itself can run with Java 8 or above. It will ask you to pick a version of Java to use for running Recaf that meets the requirements. The launcher will attempt to find existing Java installations on your machine and pre-populate the dropdown menu. If you want to use a different version or the launcher couldn't find where you installed Java you can click the "browser" button to manually pick an installation.

Using the CLI

The following is the list of CLI commands.

Auto

- Checks local system compatibility

- Keeps JavaFX up-to-date

- Keeps Recaf up-to-date

- Runs Recaf

Usage: <launcher> auto

Run

- Runs the currently installed version of Recaf

Usage: <launcher> run

-j, --java=<javaExecutable>

Path of Java executable to use when running Recaf.

Not specifying a value will use the same Java executable used by the

launcher.

Compatibility

- Checks for a compatible version of Java

- Checks if the current Java runtime includes JavaFX

- Bundling JavaFX can work, but its your responsibility to ensure the bundled version is compatible with Recaf

- Ideally use a JDK that does not bundle JavaFX and let the launcher pull in JavaFX

Usage: <launcher> compatibility [-ifx] [-ss]

-ifx, --ignoreBundledFx

Ignore problems with the local system's bundled JavaFX version

-ss, --skipSuggestions

Skip solutions to detected problems

Update Recaf

- Keeps Recaf up-to-date

Usage: <launcher> update

If you want to be on the bleeding edge of things there is an alternative command:

Usage: <launcher> update-ci [-b=<branch>]

Installs the latest artifact from CI

-b, --branch=<branch> Branch name to pull from.

By default, no branch is used.

Whatever is found first on the CI will be grabbed.

Update JavaFX

- Keeps Recaf's local JavaFX cache up-to-date with the current release of JavaFX

- Can be configured to use specific versions of JavaFX if desired

- Can be configured to delete old versions of JavaFX in the cache automatically

Usage: <launcher> update-jfx [-cfk] [-maxc=<maxCacheCount>]

[-maxs=<maxCacheSize>] [-v=<version>]

-c, --clear Clear the dependency cache

-f, --force Force re-downloading even if the local install

looks up-to-date

-k, --keepLatest Keep latest cached dependency in the cache when

clearing

-maxc, --maxCacheCount=<maxCacheCount>

Clear the dependency cache when this many files

occupy it

-maxs, --maxCacheSize=<maxCacheSize>

Clear the dependency cache when this many bytes

occupy it

-v, --version=<version> Target JavaFX version to use, instead of whatever

is the latest

Check Recaf's version

- Prints out the version of Recaf installed via the launcher

Usage: <launcher> version

Installing manually

To install and launch Recaf 4.X without the launcher here's the process to follow:

Step 1: Download Java 22 or higher

You can get Java 22+ from a variety of vendors. We have a list of recommended vendors in the previous requirements page.

Step 2: Download Recaf

You can grab the official releases from the GitHub releases. You will want to pick the larger JAR file with the -generic.jar suffix as this is the jar file that bundles all transitive dependencies (Except JavaFX since it is platform specific).

NOTE: Once Recaf 4 begins proper releases the statement above will be accurate. But for the time being Recaf 4 is only publishing snapshots under CI.

If you want to try out features and fixes before they get bundled into a release you can also check the CI for nightly artifacts. You will need to be signed into GitHub to access the artifact downloads though.

For the sake of simplicity the file downloaded in this step will be referred to as recaf.jar.

Step 3: Download JavaFX

You will need to download the four JavaFX artifacts suited for your operating system.

How do I get the artifact for my system?

- Pick a version from the displayed table.

- This will take you to a page describing information about that specific version of the dependency.

- The oldest version we would recommend is

22or any of its patch version updates (Like22.0.1). - The newest version you can choose depends on your version of Java installed. JavaFX occasionally updates what version of Java it targets.

- JFX 21 requires Java 17+

- JFX 23 requires Java 21+

- JFX 24 requires Java 22+

- You can check the JFX release notes to see whenever they bump the minimum target JDK version.

- There will be a row labeled

Files. SelectView All.- This will show you the list of each platform-specific release for the given version.

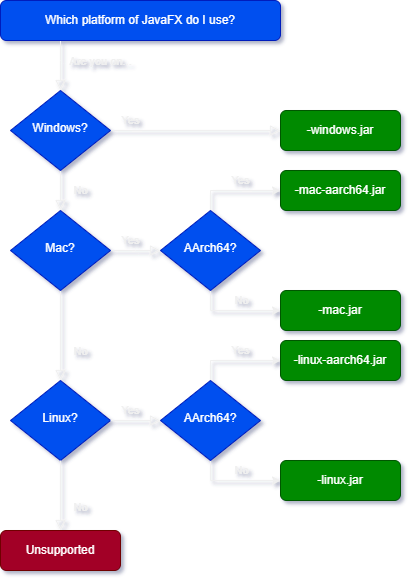

- Pick the appropriate platform for your operating system. You can find a flow-chart helper below.

- Choose

javafx-<name>-<version>-<platform>-<arch>.jar.- This should be one of the larger file variants in the list.

- Do this for each of the dependencies in the list above.

- Put all four artifacts in a directory next to

recaf.jar.- In this example I will name the directory

dependencies.

- In this example I will name the directory

Platform flow-chart:

Flow chart/decision tree showing which JFX platform you should pick for your system.

Step 4: Run Recaf with JavaFX in the classpath

Run the command java -cp recaf.jar;dependencies/* software.coley.recaf.Main.

NOTE: On Unix systems you would use

:instead of;as the dependency path separator.

Additional arguments for Recaf are documented in the developer "Running" page.

Why have an external launcher?

There are two main reasons:

- Remove self-dependency injection of JavaFX in the Recaf app itself.

- In the older iterations of Recaf we would do everything this launcher does in the background. Managing the JavaFX dependency externally ensures that we don't have to do anything nasty or hacky.

- Remove auto-updater behavior from the Recaf app itself.

- Recaf can still tell you that there is an update available, but it won't attempt to automate the process by itself.

- This is more friendly towards environments like Brew/AUR which manage the app (vs the app managing itself).

How was this handled in the past?

If you ran Recaf 2.X with JavaFX on the classpath, nothing special happens. However, if you did not have JavaFX on the classpath...

- Recaf would check for the latest version of JavaFX and then download the artifacts deemed to be the closest fit for your operating system.

- Recaf would then hijack the module system and disable reflection access restrictions

- Recaf can now use unrestricted reflection to grab an instance of the internal application classloader and inject JavaFX into it

- Because the internals moved around a bit between versions, there's some conditional logic to tweak how this is done before and after certain releases

- This loader has a private field

ucpwhich is a wrapper holding qList<URL>pointing to all classpath entries - Normally this list will only hold the standard path + anything given via

-cpbut we want to add JavaFX - Doing

List.addby itself does not notify the runtime of any changes, but thankfully they expose anaddUrl(URL)method- We call that for each of the JavaFX artifacts we downloaded earlier

- If nothing has thrown an exception so far, JavaFX should now magically load when we first reference it later on during execution

But now with a launcher I have an extra layer between me and Recaf

Both the GUI and CLI launchers allow you to immediately run Recaf, making the launcher effectively invisible.

The GUI asks you how it should behave when you first use it. The first option, which is also selected by default, will show the launcher every time you run it. The second option though will attempt to update Recaf and then run it without ever showing the launcher. The third option immediately runs Recaf without ever updating. These last two options result in the launcher immediately opening Recaf, unless there is an update available if you selected the second option.

The CLI launcher offers set-default-action as a command. You can configure the launcher to do auto if you want to always update then run Recaf, or run if you want to run Recaf without ever updating. Once the value is set any use of java -jar launcher.jar will immediately run the specified action (and in turn, open Recaf).

But I really don't want to use a launcher...

Totally understandable, please refer to the prior page "manual installation" on how to grab Recaf for strictly offline usage. Additionally, Recaf is a regular Java project so its relatively easy to clone and build the project from source too.

The launcher exists as a middle ground between the average "end user" and what I will refer to as "power users". Average end users generally don't care how something opens unless it is annoying. The launcher may be a level of indirection, but it provides single click updates without them having to remember to open a web browser, download a new version of Recaf and update their local copy. That sounds simple but most of the time they don't bother to do that, so making it easy to update is ideal (Users can report a bug, and then click it once their ticket / message is addressed). Most of the users of Recaf from my observation fall into this category.

Then there are the "power users". These are generally highly technical users that have use an OS like Arch Linux and desire a very specific setup for their installed software. If your software doesn't align with their desired setup, they either wont use your software or will post a wall of text explaining how it doesn't align with Unix/FOSS princples. Different power users also have different desired setups which. For these users its best to hand them the tools they need and tell them to figure it out themselves, because if you try and figure it out for them, you will do it wrong. Something all power users universally hate though is auto-updating software. That used to be a feature of Recaf where if you would run it, it was capable of updating itself. That is no longer the case and now updating is a task relegated to the launcher.

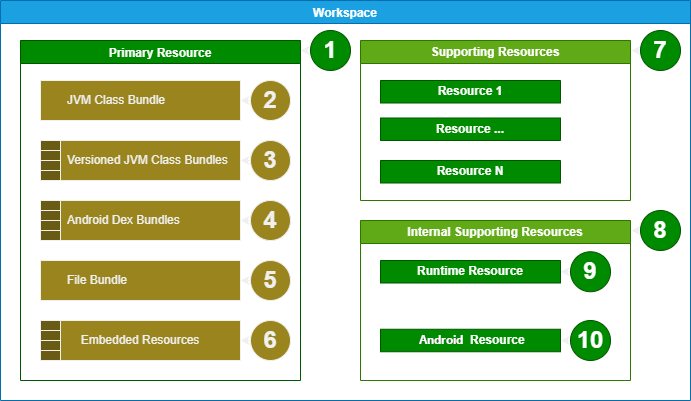

Topical Information about the JVM

The following pages in this section give an overview about how the JVM operates. Not enough to bore you, but hopefully give you enough basic information about how things work to better understand the content you will be working with inside of Recaf.

JVM Bytecode Instructions

Preface

This is a listing of the Java bytecode instructions grouped roughly into use cases. For instance, ifeq and goto belong to the Control Flow group.

What differentiates this list from the official instruction specification is that the descriptions have been modified to fit how Recaf represents them in the assembler.

Table of Contents

- Constants

- Object Creation

- Arrays

- Variables

- Stack Math

- Stack Manipulation

- Control Flow

- Fields

- Method Calls

- Dynamic Method Calls

- Type Conversion

- Returns

- Miscellaneous

Constants

| Opcode | Stack: [before]→[after] | Description |

|---|---|---|

aconst_null | → null | push a null reference onto the stack |

dconst_0 | → 0.0 | push the constant 0.0 onto the stack |

dconst_1 | → 1.0 | push the constant 1.0 onto the stack |

fconst_0 | → 0.0f | push 0.0f on the stack |

fconst_1 | → 1.0f | push 1.0f on the stack |

fconst_2 | → 2.0f | push 2.0f on the stack |

iconst_m1 | → -1 | load the int value −1 onto the stack |

iconst_0 | → 0 | load the int value 0 onto the stack |

iconst_1 | → 1 | load the int value 1 onto the stack |

iconst_2 | → 2 | load the int value 2 onto the stack |

iconst_3 | → 3 | load the int value 3 onto the stack |

iconst_4 | → 4 | load the int value 4 onto the stack |

iconst_5 | → 5 | load the int value 5 onto the stack |

lconst_0 | → 0L | push long 0L onto the stack |

lconst_1 | → 1L | push long 1L onto the stack |

ldc

| → value | push a constant value (String, int, float, long, double, Class, MethodType, MethodHandle, or ConstantDynamic) onto the stack |

bipush

| → value | push a byte (-128 through 127) onto the stack as an int value |

sipush

| → value | push a short (-32768 through 32767) onto the stack as an int value |

Object Creation

| Opcode | Stack: [before]→[after] | Description |

|---|---|---|

new

| → objectref | create new object of type |

NOTE: After creation of a new object, its constructor must properly be invoked to initialize the object. You will generally see the pattern:

// Given the code: // String myString = new String(byteArrayHere); new java/lang/String dup aload byteArrayHere invokespecial java/lang/String.<init> ([B)V astore myString

- The

newcreates the initial instance.- The

dupduplicates the reference to the instance on the stack.- The

byte[]parameter to theString(byte[])constructor is loaded on the stack.- The

String(byte[])constructor is invoked, consuming thebyte[]paramter and the duplicatedStringreference off of the stack.- The originally pushed

Stringreference is stored in a variable.

- Since the items from

dupare the same instance this stores theStringvalue after its constructor is called and initialization occurrs.

Arrays

| Opcode | Stack: [before]→[after] | Description | ||||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

anewarray

| count → arrayref | create a new array of references of length count and component type identified by type (A full class name such as java/lang/String) | ||||||||||||||||||

newarray

| count → arrayref | create a new array of primitives of length count and component type identified by type

| ||||||||||||||||||

multianewarray

| count1, [count2,...] → arrayref | create a new array of dims dimensions of type identified by desc | ||||||||||||||||||

arraylength | arrayref → length | get the length of an array | ||||||||||||||||||

aaload | arrayref, index → value | load a reference from an array | ||||||||||||||||||

aastore | arrayref, index, value → | store a reference into an array | ||||||||||||||||||

baload | arrayref, index → value | load a byte or boolean from an array | ||||||||||||||||||

bastore | arrayref, index, value → | store a byte or boolean into an array | ||||||||||||||||||

caload | arrayref, index → value | load a char from an array | ||||||||||||||||||

castore | arrayref, index, value → | store a char into an array | ||||||||||||||||||

daload | arrayref, index → value | load a double from an array | ||||||||||||||||||

dastore | arrayref, index, value → | store a double into an array | ||||||||||||||||||

faload | arrayref, index → value | load a float from an array | ||||||||||||||||||

fastore | arrayref, index, value → | store a float in an array | ||||||||||||||||||

iaload | arrayref, index → value | load an int from an array | ||||||||||||||||||

iastore | arrayref, index, value → | store an int into an array | ||||||||||||||||||

laload | arrayref, index → value | load a long from an array | ||||||||||||||||||

lastore | arrayref, index, value → | store a long to an array | ||||||||||||||||||

saload | arrayref, index → value | load short from an array | ||||||||||||||||||

sastore | arrayref, index, value → | store short to array |

Variables

| Opcode | Stack: [before]→[after] | Description |

|---|---|---|

iload

| → value | load an int value from a local variable var |

lload

| → value | load a long value from a local variable var |

fload

| → value | load a float value from a local variable var |

dload

| → value | load a double value from a local variable var |

aload

| → objectref | load a reference onto the stack from a local variable var |

istore

| value → | store int value into variable var |

lstore

| value → | store a long value in a local variable var |

fstore

| value → | store a float value into a local variable var |

dstore

| value → | store a double value into a local variable var |

astore

| objectref → | store a reference into a local variable var |

iinc

| [No change] | increment local variable var by a given amount (byte) |

NOTE: Variables are accessed by index in the class file specification, but Recaf's assembler maps variables to their name as found in the

LocalVariableTable(LVT) attribute. If a method does not have a LVT, or if the LVT contains bogus names that are not valid for use within the assembler, then auto-generated names will be used following the patternv1,v2...vN.

Stack Math

| Opcode | Stack: [before]→[after] | Description | ||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|

dadd | value1, value2 → result | add two double values value2 + value1 | ||||||||||

ddiv | value1, value2 → result | divide two double values value2 / value1 | ||||||||||

dmul | value1, value2 → result | multiply two double values value2 * value1 | ||||||||||

drem | value1, value2 → result | get the remainder from a division between two double values (value2 - ((value1 / value2) * value2)) | ||||||||||

dsub | value1, value2 → result | subtract a double from another value2 - value1 | ||||||||||

fadd | value1, value2 → result | add two float values value2 + value1 | ||||||||||

fdiv | value1, value2 → result | divide two float values value2 / value1 | ||||||||||

fmul | value1, value2 → result | multiply two float values value2 * value1 | ||||||||||

frem | value1, value2 → result | get the remainder from a division between two float values (value2 - ((value1 / value2) * value2)) | ||||||||||

fsub | value1, value2 → result | subtract two float values value2 - value1 | ||||||||||

iadd | value1, value2 → result | add two int values value2 + value1 | ||||||||||

idiv | value1, value2 → result | divide two int values value2 / value1 | ||||||||||

imul | value1, value2 → result | multiply two int values value2 * value1 | ||||||||||

irem | value1, value2 → result | logical int remainder (value2 - ((value1 / value2) * value2)) | ||||||||||

isub | value1, value2 → result | int subtract value2 - value1 | ||||||||||

iand | value1, value2 → result | perform a bitwise AND on two int values value2 & value1 | ||||||||||

ior | value1, value2 → result | bitwise int OR `value2 | ||||||||||

ixor | value1, value2 → result | int xor value2 ^ value1 | ||||||||||

ishl | value1, value2 → result | int shift left value2 << value1 | ||||||||||

ishr | value1, value2 → result | int arithmetic shift right value2 >> value1 | ||||||||||

iushr | value1, value2 → result | int logical shift right value2 >>> value1 | ||||||||||

ladd | value1, value2 → result | add two long values value2 + value1 | ||||||||||

ldiv | value1, value2 → result | divide two long values value2 / value1 | ||||||||||

lmul | value1, value2 → result | multiply two long values value2 * value1 | ||||||||||

lrem | value1, value2 → result | remainder of division of two long values (value2 - ((value1 / value2) * value2)) | ||||||||||

lsub | value1, value2 → result | subtract two long values value2 - value1 | ||||||||||

lshl | value1, value2 → result | bitwise shift left of a long value1 by int value2 positions value2 << value1 | ||||||||||

lshr | value1, value2 → result | bitwise shift right of a long value1 by int value2 positions value2 >> value1 | ||||||||||

lushr | value1, value2 → result | bitwise shift right of a long value1 by int value2 positions, unsigned value2 >>> value1 | ||||||||||

land | value1, value2 → result | bitwise AND of two long values value2 ^ value1 | ||||||||||

lor | value1, value2 → result | bitwise OR of two long values `value2 | ||||||||||

lxor | value1, value2 → result | bitwise XOR of two long values value2 ^ value1 | ||||||||||

dneg | value → result | negate a double -value | ||||||||||

fneg | value → result | negate a float -value | ||||||||||

ineg | value → result | negate int -value | ||||||||||

lneg | value → result | negate a long -value | ||||||||||

dcmpg | value1, value2 → result | compare two double values

| ||||||||||

dcmpl | value1, value2 → result | compare two double values

| ||||||||||

fcmpg | value1, value2 → result | compare two float values

| ||||||||||

fcmpl | value1, value2 → result | compare two float values

| ||||||||||

lcmp | value1, value2 → result | compare two long values

|

Stack Manipulation

| Opcode | Stack: [before]→[after] | Description |

|---|---|---|

dup | value → value, value | duplicate the value on top of the stack |

dup_x1 | value2, value1 → value1, value2, value1 | insert a copy of the top value into the stack two values from the top. value1 and value2 must not be of the type double or long. |

dup_x2 | value3, value2, value1 → value1, value3, value2, value1 | insert a copy of the top value into the stack two (if value2 is double or long it takes up the entry of value3, too) or three values (if value2 is neither double nor long) from the top |

dup2 | {value2, value1} → {value2, value1}, {value2, value1} | duplicate top two stack words (two values, if value1 is not double nor long; a single value, if value1 is double or long) |

dup2_x1 | value3, {value2, value1} → {value2, value1}, value3, {value2, value1} | duplicate two words and insert beneath third word (see explanation above) |

dup2_x2 | {value4, value3}, {value2, value1} → {value2, value1}, {value4, value3}, {value2, value1} | duplicate two words and insert beneath fourth word |

pop | value → | discard the top value on the stack |

pop2 | {value2, value1} → | discard the top two values on the stack (or one value, if it is a double or long) |

swap | value2, value1 → value1, value2 | swaps two top words on the stack (note that value1 and value2 must not be double or long) |

Control Flow

| Opcode | Stack: [before]→[after] | Description |

|---|---|---|

goto

| [no change] | jump to label |

if_acmpeq

| value1, value2 → | if references value1 == value2, jump to label |

if_acmpne

| value1, value2 → | if references value1 != value2, jump to label |

if_icmpeq

| value1, value2 → | if ints value1 == value2, jump to label |

if_icmpge

| value1, value2 → | if ints value1 >= value2, jump to label |

if_icmpgt

| value1, value2 → | if ints value1 > value2, jump to label |

if_icmple

| value1, value2 → | if ints value1 <= value2, jump to label |

if_icmplt

| value1, value2 → | if ints value1 < value2, jump to label |

if_icmpne

| value1, value2 → | if ints value1 != value2, jump to label |

ifeq

| value → | if value == 0, jump to label |

ifge

| value → | if value >= 0, jump to label |

ifgt

| value → | if value > 0, jump to label |

ifle

| value → | if value <= 0, jump to label |

iflt

| value → | if value < 0, jump to label |

ifne

| value → | if value != 0, jump to label |

ifnonnull

| value → | if value != null, jump to label |

ifnull

| value → | if value == null, jump to label |

lookupswitch

| key → | jump to the label associated with the given key, or if no such entry in the table exists jump to the default label |

tableswitch

| index → | jump to the label associated with cases[min-index], or if the computed index of min-index is out of bounds jump to the default label |

athrow | objectref → [empty], objectref | throws an error or exception (notice that the rest of the stack is cleared, leaving only a reference to the Throwable) |

jsr ✝

| value → address | jump to label while also pushing the current code address to the stack (Generally this is immediately stored in a variable at the destination) |

ret ✝

| [no change] | jump to the code offset stored in the variable var |

NOTE:

jsrandretare deprecated instructions and are only present in classes from Java 7 or earlier. They cannot be used in Java 8 or above.

Fields

| Opcode | Stack: [before]→[after] | Description |

|---|---|---|

getfield

| objectref → value | get an instance field defined by the owner class and the field's name and desc |

getstatic

| → value | get a static field defined by the owner class and the field's name and desc |

putfield

| objectref, value → | set an instance field defined by the owner class and the field's name and desc |

putstatic

| value → | set a static field defined by the owner class and the field's name and desc |

Method Calls

| Opcode | Stack: [before]→[after] | Description |

|---|---|---|

invokeinterface

| objectref, [arg1, arg2, ...] → result | invokes an interface method defined by the owner class and the method's name and desc on object objectref and puts the result on the stack (might be void) |

invokespecial

| objectref, [arg1, arg2, ...] → result | invokes an instance method defined by the owner class and the method's name and desc on object objectref and puts the result on the stack (might be void) |

invokestatic

| [arg1, arg2, ...] → result | invokes a static method defined by the owner class and the method's name and desc and puts the result on the stack (might be void) |

invokevirtual

| objectref, [arg1, arg2, ...] → result | invokes a virtual method defined by the owner class and the method's name and desc on object objectref and puts the result on the stack (might be void) |

invokespecialinterface ✝

| objectref, [arg1, arg2, ...] → result | invokes an instance method defined by the owner class and the method's name and desc on object objectref and puts the result on the stack (might be void) |

invokestaticinterface ✝

| [arg1, arg2, ...] → result | invokes a static method defined by the owner class and the method's name and desc and puts the result on the stack (might be void) |

invokevirtualinterface ✝

| objectref, [arg1, arg2, ...] → result | invokes a virtual method defined by the owner class and the method's name and desc on object objectref and puts the result on the stack (might be void) |

NOTE: The ✝ instructions in the table above are abstractions in the Recaf assembler. These

invoke_x_interfaceare variants of their respectiveinvoke_xinstructions with theitfflag set totrue. This is normally not something you will encounter in properly compiled Java applications but has been found in some improperly processed classes in the wild (In particular the forge modding environment).

Dynamic Methods Calls

| Opcode | Stack: [before]→[after] | Description |

|---|---|---|

invokedynamic

| [arg1, arg2 ...] → result | invokes a dynamic method and puts the result on the stack (might be void). The callsite handles the dynamic invocation. |

NOTE: Before Java 9 - JEP 280

Stringconcatenation ("a" + "b") usedStringBuilderto append multiple values together. Afterwards, concatenation was reimplemented to useinvokedynamic. This instruction is the backbone of a lot of useful language features not only in just Java, but also other JVM languages. Decompilers will often understand the specific usages for cases found in the standard Java language, but will often fail for third party languages or within auto-generated / obfuscated code.

Type Conversion

| Opcode | Stack: [before]→[after] | Description |

|---|---|---|

d2f | value → result | convert: double to float |

d2i | value → result | convert: double to int |

d2l | value → result | convert: double to long |

f2d | value → result | convert: float to double |

f2i | value → result | convert: float to int |

f2l | value → result | convert: float to long |

i2b | value → result | convert: int to byte |

i2c | value → result | convert: int to char |

i2d | value → result | convert: int to double |

i2f | value → result | convert: int to float |

i2l | value → result | convert: int to long |

i2s | value → result | convert: int to short |

l2d | value → result | convert: long to double |

l2f | value → result | convert: long to float |

l2i | value → result | convert: long to int |

checkcast

| objectref → objectref | checks whether an objectref is of a certain specified type |

instanceof

| objectref → result | determines if an object objectref is of a given type |

An alternative view for the primitive-to-primitive conversions:

| From → To | int | long | float | double | byte | char | short |

|---|---|---|---|---|---|---|---|

| int | = | i2l | i2f | i2d | i2b | i2c | i2s |

| long | l2i | = | l2f | l2d | ❌ | ❌ | ❌ |

| float | f2i | f2l | = | f2d | ❌ | ❌ | ❌ |

| double | d2i | d2l | d2f | = | ❌ | ❌ | ❌ |

Returns

| Opcode | Stack: [before]→[after] | Description |

|---|---|---|

areturn | objectref → [empty] | return a reference |

dreturn | value → [empty] | return a double |

freturn | value → [empty] | return a float |

ireturn | value → [empty] | return an int |

lreturn | value → [empty] | return a long |

return | → [empty] | return void (nothing) |

Miscellaneous

| Opcode | Stack: [before]→[after] | Description |

|---|---|---|

monitorenter | objectref → | enter monitor for object ("grab the lock" – start of synchronized(objectref) section) |

monitorexit | objectref → | exit monitor for object ("release the lock" – end of synchronized(objectref) section) |

nop | [No change] | do nothing |

line

| [No change] | marker that represents a LineNumberTable entry for the given line, beginning at the first label preceding the marker |

label: | [No change] | marker in bytecode for the beginning of a block of instructions; referenced by jump and switch instructions |

JVM Execution: Stack + Locals

The Java Virtual Machine (JVM) executes method bytecode using a stack-based architecture. Each thread in a Java program has its own private JVM stack, which consists of stack frames. A new stack frame is created and pushed onto the stack every time a method is invoked. When the method completes (normally or via a thrown exception), its frame is popped and discarded. Each stack frame represents the state of a single method invocation and contains:

- Local Variables: An array of variables holding primitive and reference types. Contents are stored and accessed in the array via the variable's index (Recaf's assembler will use the name of variables rather than its index).

- Operand Stack: A Last-In-First-Out (LIFO) structure used as temporary space for intermediate values during computations.

The JVM also maintains a program counter (PC) per thread, which points to the current bytecode instruction being executed in the method. The PC advances sequentially as instructions are processed, unless modified by control flow instructions.

Execution Flow Overview

- When a method is invoked:

- A new frame is created for the invoked method.

- The caller pushes copies of the argument values (primitive values or copies of object references) onto the invoked method's operand stack.

- Execution in the caller is paused at the instruction immediately after the method invocation, ready to resume once the invoked method completes its own execution and (if applicable) pushes a return value onto the caller's operand stack.

- Instructions execute one by one:

- Load constants or variables → push to the operand stack.

- Store result → pop from the stack to a variable.

- Arithmetic/logic → pop operands, compute, push result.

- Control flow → conditionally jump to a label.

- Invoke methods → see above description of method invocation.

- On return:

- The frame is popped, and the value on top of the stack (if any is present) is pushed to the caller's operand stack.

Examples

Local variables and arithmetic

// int two = 2;

iconst_2

istore two

// int ten = 10;

bipush 10

istore ten

// int result = 0;

iconst_0

istore result

// result = ten * ten;

iload ten

dup

imul

istore result

// result -= 98;

iinc result -98

// result -= (int) Math.pow(result, 6);

iload result

iload result

i2d

ldc 6D

invokestatic java/lang/Math.pow (DD)D

d2i

isub

istore result

// return result;

iload result

ireturn

Control flow handling of a for loop

// int sum = 0;

iconst_0

istore sum

// int i = 0;

iconst_0

istore i

// Start of for loop

FOR:

// if (i >= 0) break;

iload i

bipush 100

if_icmpge EXIT

// sum += i;

iload sum

iload i

iadd

istore sum

// i++;

iinc i 1

goto FOR

// End of for loop

EXIT:

// return sum;

iload sum

ireturn

Editing

Recaf offers two ways of editing classes. Recompiling decompiled code and reassembling disassembled code. Each has their own benefits, but generally you should use the assembler approach when possible. You can find out more about each approach in the following pages in this section.

Recompiling

Stub. And no, you cannot ignore errors.

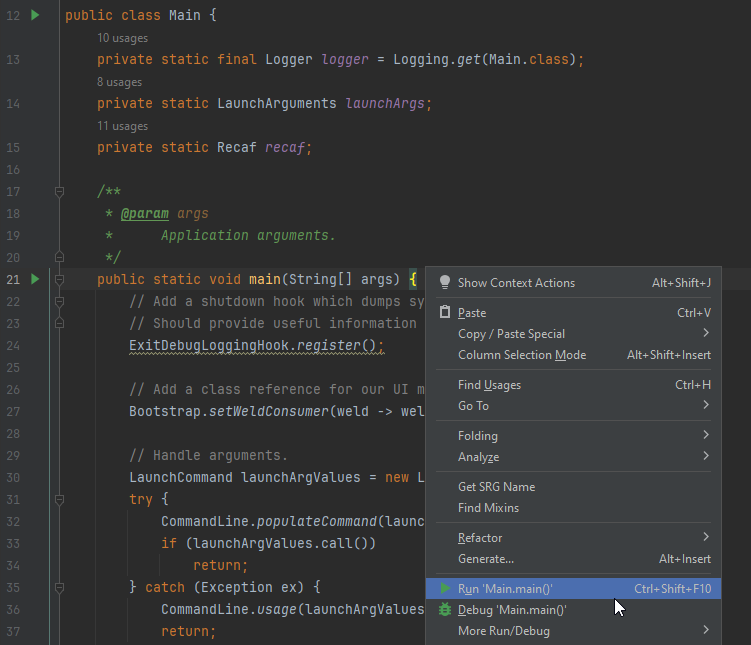

Assembler

The assembler lets you edit the bytecode of Java classes in a low level format. It should be used whenever possible as opposed to recompiling decompiled code. Using the assembler is comparable to a surgeon using a scalpel, verses recompiling which is comparable to them using a sledgehammer for the same operation.

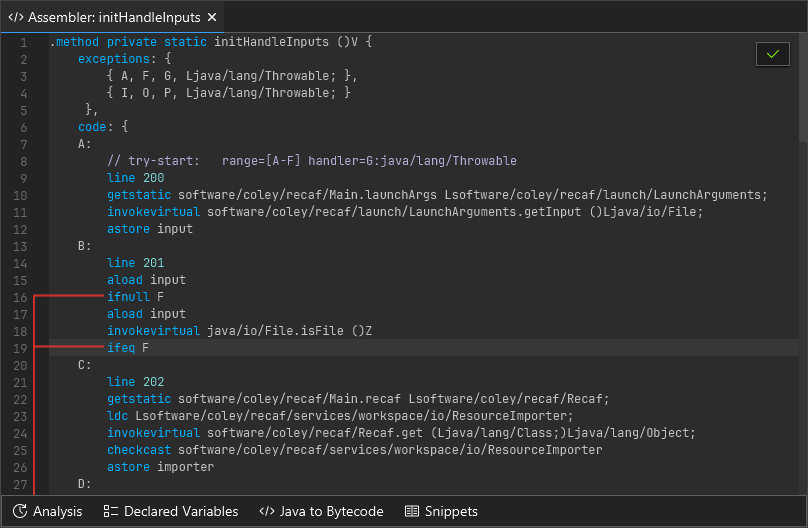

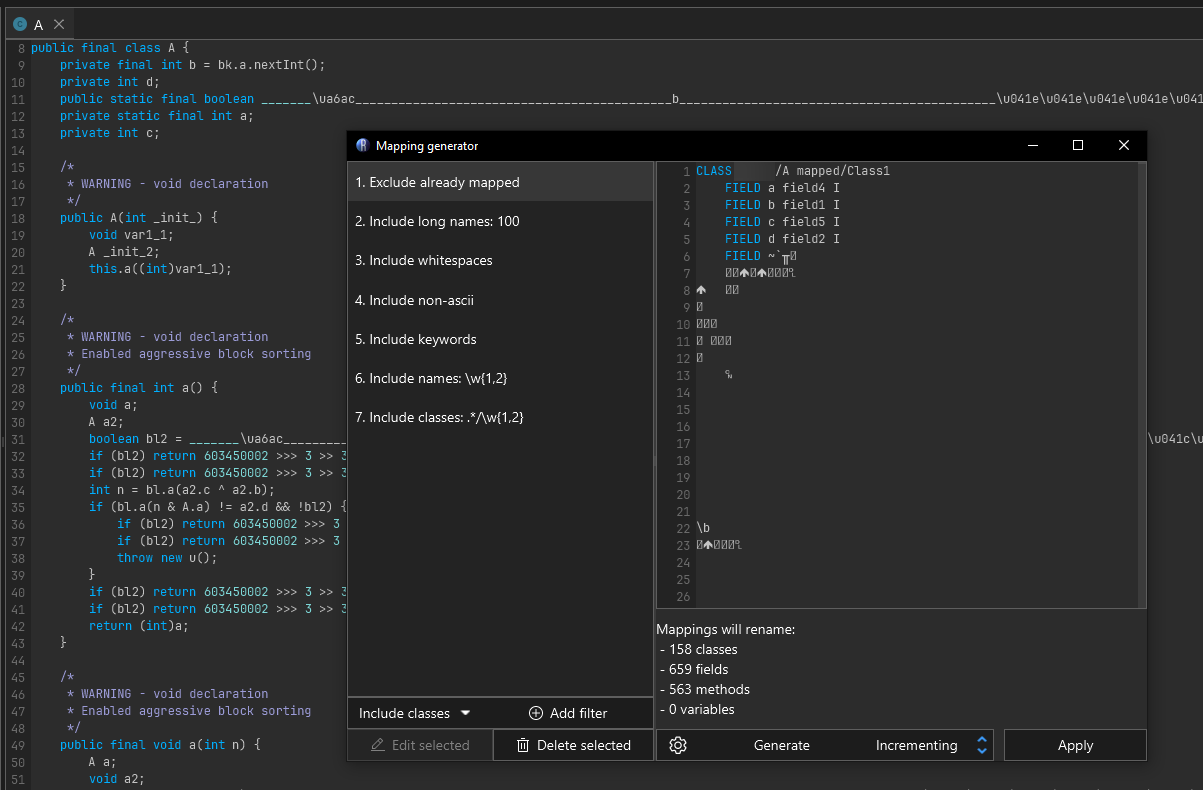

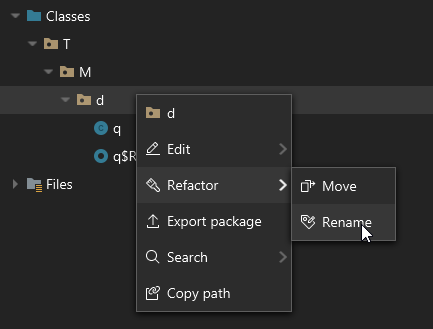

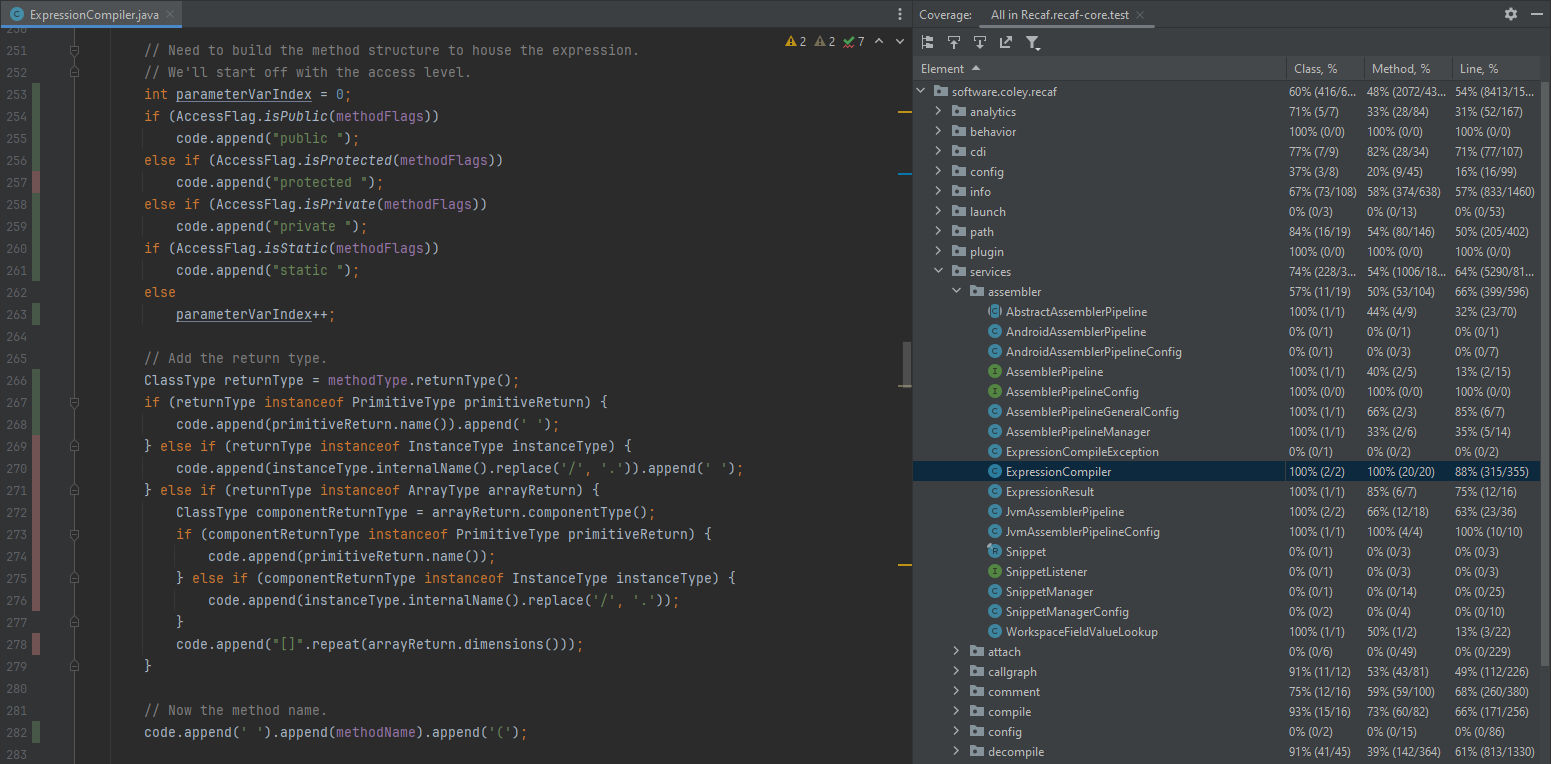

A method open in the assembler, showing various method attributes and instructions.

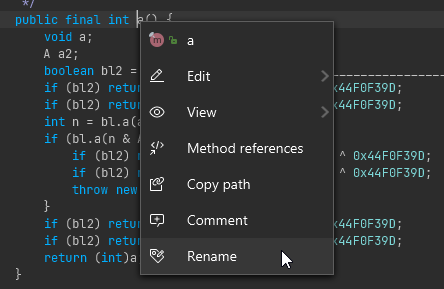

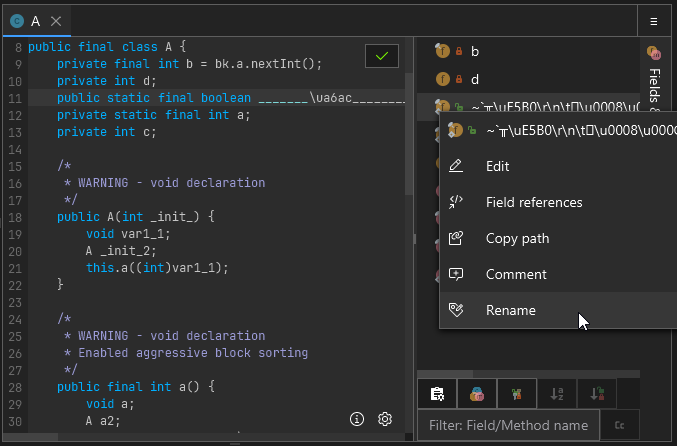

How do I open the assembler?

The assembler for any class, field, or method can be accessed by right clicking on the name of the class, field, or method and selecting "Edit > Edit in assembler". If for some reason right clicking does not work (which can occur when the context parser cannot understand obfuscated code) you can also right click on items in the "Fields & Methods" tab shown on the right hand side of any open class.

A short animation showing how to open the assembler.

Examples of where to click:

// "Hello" will open the class assembler

// |

// V

class Hello {

// "message" will open the field assembler

// |

// V

String message = "hi";

// "foo" will open the method assembler

// |

// V

void foo() {

System.out.println(this.message);

}

}

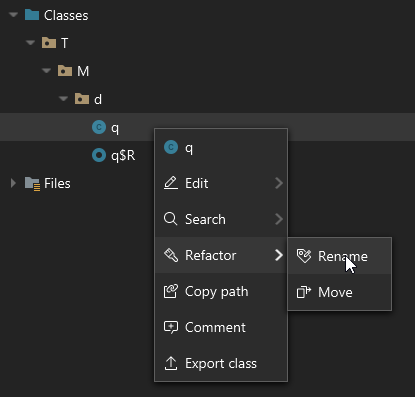

Additionally, you can right click on the class in the workspace explorer (which is where the tree of classes are on the left) to open the class level assembler.

I don't know much about bytecode, what now?

Ideally you can learn just enough to get by. Here are some relevant pages covering the basics of Java bytecode:

- JVM Bytecode Instructions: A list of all the JVM bytecode instructions and what they do.

- JVM Execution: Stack + Locals: A brief overview of how methods are executed, showing how the stack and local variable slots operate.

After you read over these pages the additional tools and features offered by the assembler should be able to carry you the rest of the way to making your desired changes.

Assembler Features

Java to Bytecode

You can write snippets of Java code and the Java to Bytecode tool will generate the equivalent bytecode. It uses the standard javac compiler behind the scenes, so whatever version of Java you run Recaf with dictates what features you have access to. But this means as long as you can fit your snippet into a continous block of code (no separate method definitions) it'll be supported here. Though, there are other shortcuts included like the ability to add import statements to the top.

Using the 'Java to Bytecode' feature to implement a toString() method.

The editor is tied to whatever context the assembler is open with. For instance, if you open the assembler on a method that returns String (or any Object type) it will expect that your snippet of Java code also eventually returns a String (You can ignore it and keep the default return null if desired).

Another benefit of this context-sensitive compiler is that you can access information in that context. You will always have access to the current class's fields and methods. But when you open a method in the assembler you will also have access to the parameters and defined local variables of the method.

For instance, consider this method:

double combine(double base, double power, double extra) {

double tmp = Math.pow(base, power);

return tmp + extra;

}

In this context you not only have access to the parameters base, power, and extra, but you also can access tmp in the Java to Bytecode tool. This means you could write something like return tmp - extra.

Snippets

If you find yourself regularly using the Java to Bytecode feature for the same kind of operation you may want to keep a copy of the results as a snippet. Snippets are just segments of code that you find useful for copy-pasting later.

Using the 'Snippets' feature to get the code for a simple `println`.

Recaf comes with a few example snippets. They have comments in them outlining what the original source code equivalent is and then the bytecode that corresponds to that source:

for (int i = 0; i < 10; i++) someMethod();while (i >= 0) { someMethod(); i--; }if (b) whenTrue(); else whenFalse();System.out.println("Hello");System.out.printf("hello %s\n", name);

When you create your own snippet you are prompted to give it a name and description. Afterwards you can type out our desired code snippet in the editor and then press the save button to keep it.

Analysis of stack/locals

While it is encouraged to read the JVM Bytecode Instructions and execution pages, mentally keeping track of how things move around on the stack and are stored in local variables can be tedious. The Analysis will do all of this for you. Contents of easily computed values (primitives and Strings) will also show their current values based on where your caret/text position is at within the method assembler.

Using the 'Analysis' feature to observe how instructions work.

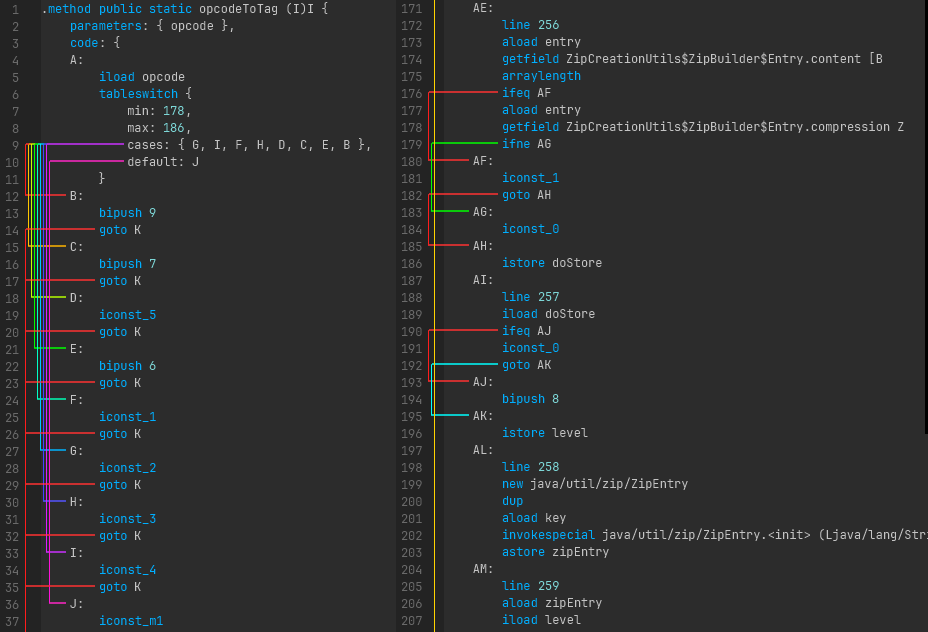

Control flow lines

Some instructions change the control flow of program exection. Remembering what label a jump goes to and scrolling to it (or using Control + F to find it) is tedious. Instead, when you click on any label or any instruction that potentially manipulates control flow you will see where those instructions will lead to. To disambiguate which jumps go where, any time there are multiple control flow lines next to one another they will use a distinct color. Ihe color selection is based on a hue rotation, so something like a large switch statement will generate a rainbow.

A series of lines between instructions and referenced labels.

Tab completion

The names of instructions and field/method references can be tab completed.

A short animation showing how tab completion can be used to make writing bytecode faster.

Obfuscation

If you're using Recaf to inspect suspected malware, or closed source software, you are more than likely going to encounter obfuscation in some form or another.

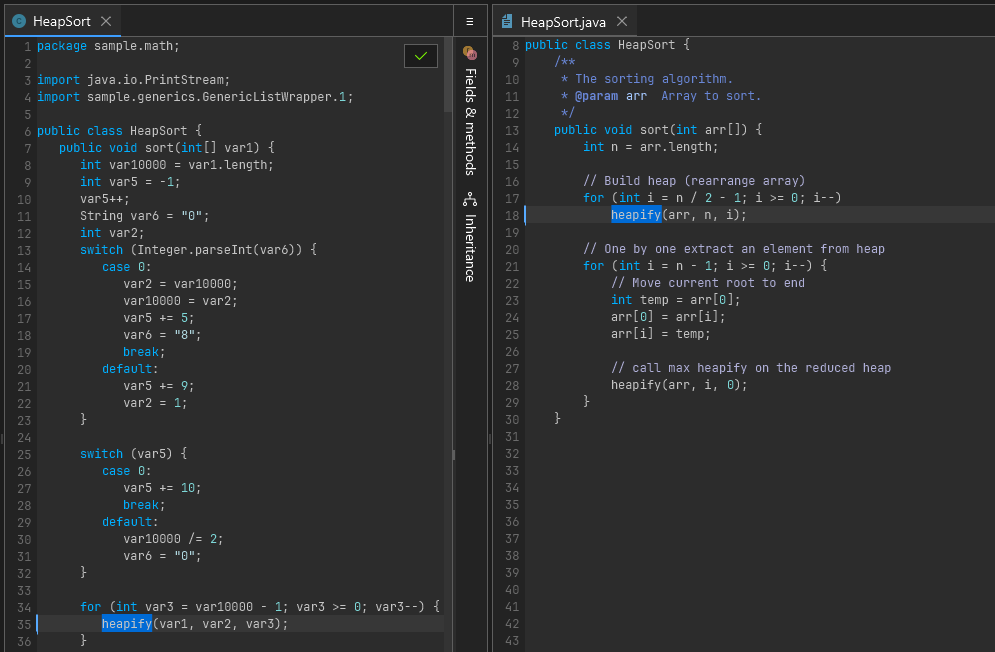

An obfuscated HeapSort algorithm with opaque control flow

What is obfuscation?

Simply put, obfuscation is anything that makes it harder to reverse engineer the logic of an application.

Why do people obfuscate their software?

It depends on who is developing the software and the intended purpose of the software. There are plenty of legitimate reasons to obfuscate software, and also plenty of illegitimate ones.

- Decompiling Java classes with modern decompilers is trivial and generally very accurate. Obfuscation makes it more difficult to recover source code that is easily copy-pastable. It is not foolproof but deters many potential reverse engineers with limited experience.

- A majority of a Java class file is often its constant pool, which includes all the names of referenced classes, fields, and methods. At runtime it is very rare that you need to have this information kept in a meaningful manner (unless the application uses reflection). Some obfuscation can remove or compress this data by renaming these symbols to shorter values, often a single character or two. ProGuard is a great example of a tool that focuses on this direction of obfuscation.

- Some developers assume that obfuscating their software makes it more secure. There can be some truth to this but only in the manner that it slows down bad actors rather than outright preventing them from tampering with the application. If your application has to run on a user's device, that user can, with enough time and knowledge, circumvent any local security measures.

- Malware developers will heavily obfuscate their code to hide malicious behavior. This makes sense at first, but at the same time just brings more suspicion to the application as it is then heavily obfuscated. The main reason why they would do this is to bypass any scan-time detections of anti-malware programs looking for known malware signatures. Again, its a double-edged sword because sometimes anti-malware vendors end up making signatures for the obfuscation itself which leads to any use of the specific obfuscation strategy being flagged even if the application being obfuscated is not malicious in nature.

What can Recaf do about obfuscation?

See the chapters under the deobfuscation section.

Jar / Zip Obfuscation

Jar files are Zip files with the only major difference being the expectation of special paths like contents in the META-INF folder and location of .class files matching the class's name. This is at least the only major difference on paper. In practice the JVM has multiple ways it will parse Jar and Zip files. Here are a few of them:

java -jar example.jarZipFile zip = new ZipFile(new File("example.jar"));ZipInputStream zis = new ZipInputStream(new FileInputStream(new File("example.jar")));FileSystem zipFs = FileSystems.newFileSystem(Paths.get("example.jar"))

Each of these cases uses a different backend for parsing Jar/Zip files. This allows obfuscators to abuse the Zip file format in different ways for each case. To illustrate the problem lets first take a very high-level look at how a Zip file is usually structured.

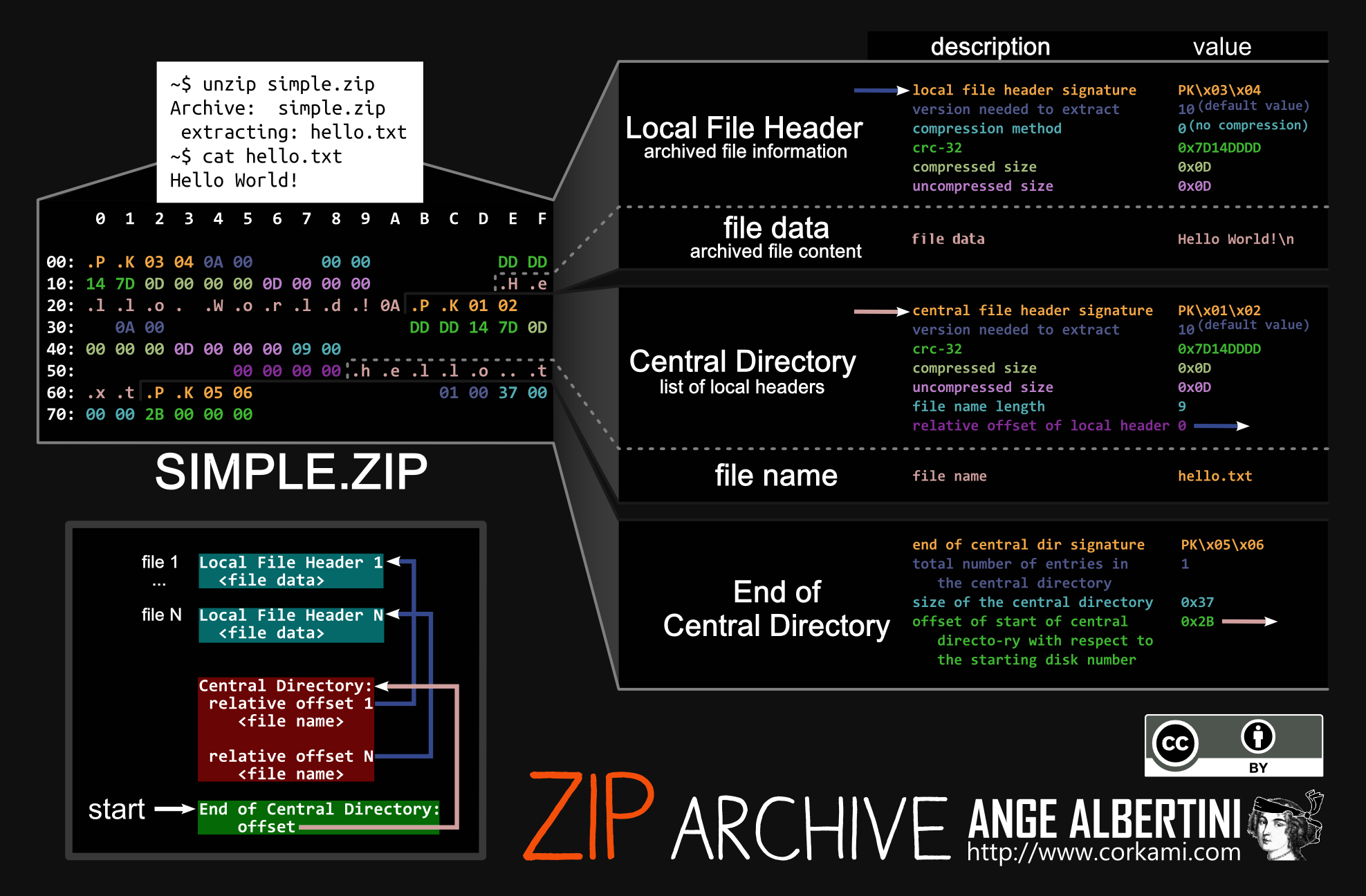

A diagram by Ange Albertini breaking down a small Zip file containing a single text file.

Zip files generally begin with 50 4B 03 04 (Ascii PK..) since this is the header signature of a "Local File Header". These local file headers are where the contents of compressed files in the zip are defined (See "file data"). Going forward in the file you will see the "Central Directory" which has almost identical values to the "Local File Header". Then last section is the "End of Central Directory". Central directories, as described in the graphic, list the available local file headers that are present further to the start of a file. The end of the central directory outlines where these central directory entries begin in the file, and how many of them there are. Zip files are intended to be read backwards. You read the end of the central directory, scan backwards to collect the central directories, and that should give you all the information you need to understand what is contained in the Zip file.

Central directories and local file headers have a lot of the same fields. As described before, the central directories are all you need to understand what is in the Zip file, so the local file header values should match. However in the realm of obfuscation the intended purposes of these structures are thrown out of the window.

Examples of Jar / Zip obfuscation

For the following cases consider experimenting on your own with the following samples:

- hello.jar

- hello-trailing-slash.jar

- hello-trailing-slash-0-length-locals.jar

- hello-only-lfh.jar

- hello-deceptive.jar

To discover more about the structural differences between these files, you should use a hex editor tool like ImHex using the zip pattern. Be aware, the ImHex zip pattern will fail if there is no "End of Central Directory" record since it works backwards from there. You can work around this limitation with a hack something like this:

// Replace the last line of the ImHex zip pattern with this

fn getName(u32 val) {

if (std::mem::read_unsigned(val, 4, std::mem::Endian::Little) == 0x2014b50) {

return "CentralDirectoryFileHeader";

} else if (std::mem::read_unsigned(val, 4, std::mem::Endian::Little) == 0x6054b50) {

return "EndOfCentralDirectory";

} else if (std::mem::read_unsigned(val, 4, std::mem::Endian::Little) == 0x4034b50) {

return "LocalFileHeader";

}

return "unknown";

};

struct Wrapper {

if (std::mem::read_unsigned(addressof(this), 4, std::mem::Endian::Little) == 0x2014b50) {

CentralDirectoryFileHeader cen;

} else if (std::mem::read_unsigned(addressof(this), 4, std::mem::Endian::Little) == 0x6054b50) {

EndOfCentralDirectory eocd;

} else if (std::mem::read_unsigned(addressof(this), 4, std::mem::Endian::Little) == 0x4034b50) {

LocalFileHeader loc;

}

} [[name(getName(addressof(this)))]];

// Only top-level structs can be mapped, so we make an array of wrappers,

// which can mutate to evaulate to each respective kind of Zip structure.

// This approach has a number of problems but works well enough for the provided examples above.

Wrapper entries[while(!std::mem::eof())] @ 0x00 [[inline]];

Obfuscated java -jar cases

When you use java -jar example.jar to run an application, the JVM is using libzip. This implementation reads the Jar file backwards, as intended by the Zip file format. When a "Central Directory" defines a value that is also replicated by the "Local File Header", the "Central Directory" version is always preferred, meaning the "Local File Header" can be filled with mostly junk. This however is not strictly the case for the "compressed size" fields. Neither the "Local File Header" or "Central Directory"* are trusted for the size. The JVM libzip implementation scans from the where the file data is supposed to begin, up to the PK.. header of the next available Zip entry. This means both the local and central values can be something like 0 which makes the file appear empty, but executes fine at runtime. Also, if a class's file name ends with a trailing / that gets silently dropped. This fools most zip tools into displaying the file (because it has all the bytes of the class file) as a folder instead.

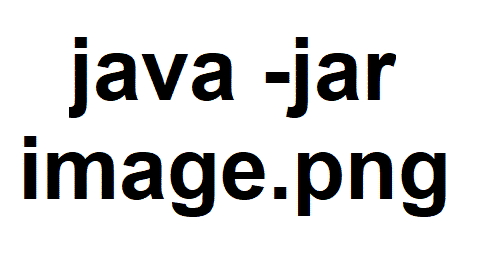

So to summarize:

- Class files can have contradictory data between the "Local File Header" and "Central Directory" entries

- Class files can have bogus values for their "(un)compressed size" fields

- Class files can have bogus values for their CRC fields

- Class files can appear as directories by ending their zip file entry path with

/ - Jar files can have leading junk bytes in front of them, such as an image or executable making it a polygot

Download this image and run java -jar hello.png

Obfuscated ZipFile cases

The implementation of ZipFile used to be largely implemented by libzip back in Java 8, but has rewritten in Java 9 to be implemented fully in Java per JDK-8145260. It retains most of the same behaviors due to the nature of this rewrite being a port, but has a few minor differences.

- Trailing slashes in class file path names results in

ZipEntry.isDirectory()beingtruewhich will trick many applications into skipping them.

Obfuscated ZipInputStream cases

Streaming a Jar/Zip file will read from the front. This adds the following conditions:

-

The file cannot have any leading junk bytes. It must start with a "Local File Header".

- This is actually abusable because any "Central Directory" or "End of Central Directory" entries can be excluded. Many applications will not open a Zip file if it does not have these records. But if a Java application is written to use

ZipInputStreamthese "invalid" files are perfectly fine.

- This is actually abusable because any "Central Directory" or "End of Central Directory" entries can be excluded. Many applications will not open a Zip file if it does not have these records. But if a Java application is written to use

-

The fields of the "Local File Header" must be correct, for instance the "(un)compressed size" and "CRC" values must be valid for the data held by the "file data" field.

Obfuscated FileSystem cases

Passing a Path to a Jar/Zip file when creating a FileSystem leads to yet another custom implementation of Zip file parsing. However, it is mostly the same as ZipFile in terms of behavior. The main key difference is that it skips entries where ZipEntry.isDirectory() is true which can lead to skipping over valid class files.

Parsing Jar / Zip files with Recaf

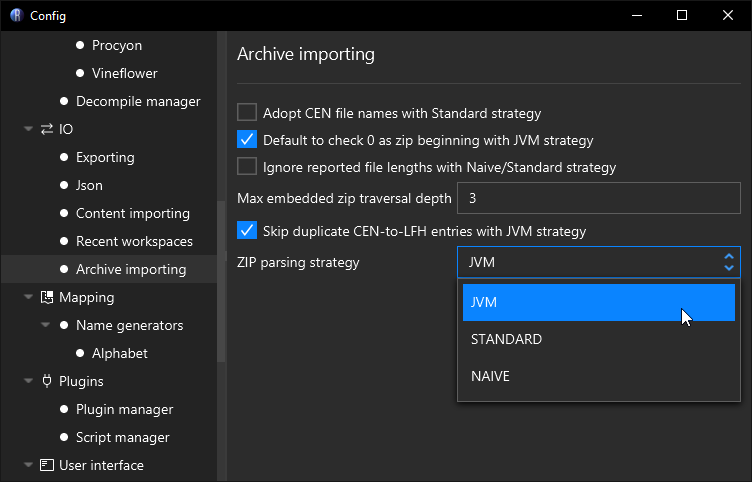

Recaf by default will read Jar/Zip files as if you are reading them via java -jar but this behavior is configurable in the Archive importing section of the config window. The most important value to change is the parsing strategy where:

- "JVM " mirrors

java -jarmechanics - "Standard" mirrors

ZipFilemechanics - but scanning forward from the start of the file rather than backwards - "Naïve" mirrors

ZipInputStreammechanics

Recaf's archive importing config allows you to change how Jar/Zip files are read

| Option | Description |

|---|---|

| Adopt CEN file names with Standard Strategy | Standard strategy uses names in the "Local File Header" by default, enabling this will use names specified in the "Central Directory" instead. |

| Default to check 0 as zip beginning with JVM strategy | Enabling this allows the JVM strategy to skip some edge case checks when dealing with Jar/Zip files that have multiple Jar/Zip files concatenated together, or embedded in one another. This is enabled by default for a slight performance gain. |

| Ignore reported file lengths with Naïve/Standard strategy | Enabling this will change how the "file data" of "Local File Header" entries are read with Naïve and Standard strategies. By default they trust the "compressed size" field. This changes them to mirror the JVM strategy, where the lengths are interpreted by scanning to the next zip file entry in the file. |

| Max embedded zip traversal depth | Many applications will bundle other Jar/Zip files within themselves. Recaf will display the contents of embedded jars in the workspace tree in the "Embedded" category. It will continue to do this for the number of given levels here. The default value is 3 as most standard applications do not go beyond this level. |

| Skip duplicate CEN-to-LFG entries with JVM strategy | Some obfuscated Jar/Zip files will have multiple "Central Directory" entries point to the same "Local File Header" to make extraction tools extract the same file many times over. This option checks if a "Local File Header" has been parsed already and skips adding duplicate entries to the workspace when enabled. |

| Zip parsing strategy | As outlined above, the strategy selected determines which use case the Jar/Zip is intended to be read with. Most of the time you are generally going to want to stick with the JVM strategy, unless there are no "Central Directory" entries, which then you will need to use the Naïve strategy. |

Name Obfuscation

Obfuscation that changes the names of classes, fields, and methods is commonly referred to as name obfuscation, or identifier renaming. There are plenty of different ways to name things that cause reverse engineering to be more challenging.

Limitations of name obfuscation

JVMS 4.2 defines what characters are not allowed to appear in different kinds of names.

Class and interface names that appear in class file structures are always represented in a fully qualified form known as binary names. For historical reasons, the syntax of binary names that appear in class file structures differs from the syntax of binary names documented in JLS §13.1. In this internal form, the ASCII periods (

.) that normally separate the identifiers which make up the binary name are replaced by ASCII forward slashes (/). The identifiers themselves must be unqualified names.Names of methods, fields, local variables, and formal parameters are stored as unqualified names. An unqualified name must contain at least one Unicode code point and must not contain any of the ASCII characters

. ; [ /(that is, period or semicolon or left square bracket or forward slash).Method names are further constrained so that, with the exception of the special method names

<init>and<clinit>, they must not contain the ASCII characters<or>(that is, left angle bracket or right angle bracket).

Aside from these few restrictions, the sky is the limit.

Examples

The following name obfuscation strategies will target this basic data model class:

public class User {

private String username;

private int userId;

public User(String username, int userId) {

this.username = username;

this.userId = userId;

}

public void displayUserInfo() {

System.out.println("User: " + username + ", ID: " + userId);

}

public static void main(String[] args) {

User user = new User("Alice", 12345);

user.displayUserInfo();

}

}

Short & overloaded naming

Obfuscators like ProGuard will rename as many things as possible to the same short names. This has two main benefits.

- It makes it difficult to determine what is being referred to when looking at decompiler output since things are only referred to by name.

- It saves space in the constant pool, which makes the class file smaller. Instead of having five separate entries for

User,username,userId,user, anddisplayUserInfoyou now only have one entry fora.

// User --> a

public class a {

// username + userId --> a

// As long as the types of multiple fields are unique, they can share the same name

private String a;

private int a;

// Parameters & local variables can be named anything

// since they are debugger metadata not required for much else at runtime.

public a(String a, int a) {

// Because the types are unique, but names are shared its impossible to tell what is

// assigned to what here just by looking at decompiler output

this.a = a;

this.a = a;

}

public void a() {

// If you're lucky the decompiler will hint which field is referenced in ambiguous cases

// by casting to the field's type.

System.out.println("User: " + (String) a + ", ID: " + (int) a);

}

public static void main(String[] a) {

a a = new a("Alice", 12345);

a.a();

}

}

Reserved keyword naming

Identifiers can be mapped to reserved keywords such as primitives (int, float, etc), access modifiers (private, public, etc) and other language features such as switch, for, etc. This is generally annoying as it messes with syntax highlighting of tools and confuses Java source code parsers.

Note: In this case, all identifiers are given unique keywords, but the same principle as discussed before can be applied. You could very well name every identifier in the example

voidlike how the prior example named every identifiera.

public class void {

private String float;

private int int;

public void(String short, int byte) {

this.float = short;

this.int = byte;

}

public void long() {

System.out.println("User: " + float + ", ID: " + int);

}

public static void main(String[] private) {

void char = new void("Alice", 12345);

char.long();

}

}

I and L naming

The letters I and l in some font families look very similar. Some obfuscators take advantage of this by naming identifiers with a series of I and l in the hopes that all identifiers visually look identical. For instance:

IIlIIIlIIllIIlI

With a good font, these will be easily identifiable as separate names.

public class IIlII {

private String IlIIl;

private int lIIlI;

public IIlII(String IlIIl, int lIIlI) {

this.IlIIl = IlIIl;

this.lIIlI = lIIlI;

}

public void IIIlI() {

System.out.println("User: " + IlIIl + ", ID: " + lIIlI);

}

public static void main(String[] IIIII) {

IIlII llIll = new IIlII("Alice", 12345);

llIll.IIIlI();

}

}

Empty space naming

There are plenty of unicode letters that look like empty spaces. Combining several of these together will let an obfuscator make classes look largely empty.

public class {

private String ;

private int ;

public (String , int ) {

this. = ;

this. = ;

}

public void () {

System.out.println("User: " + + ", ID: " + );

}

public static void main(String[] ) {

= new ("Alice", 12345);

.();

}

}

Windows reserved naming

Name a class CON in any variation of capitalization on a Windows computer and see what happens.

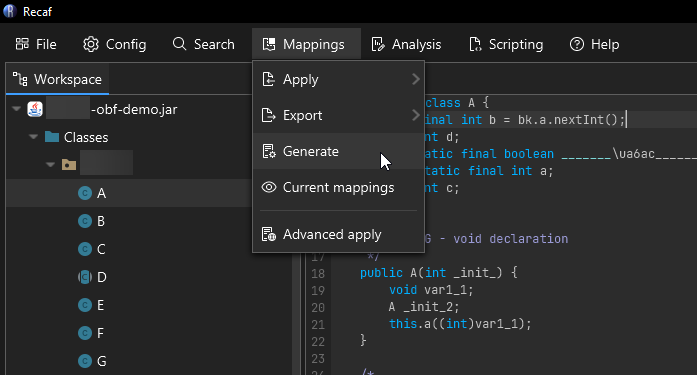

Cleaning up names with Recaf

See the following page: Mapping

Constant Obfuscation

Generally when you write an application, having sensitive data like an API key in the client source code is a bad idea... but sometimes it cannot be avoided. In such cases its a wise idea to use constant obfuscation, which transforms single values like numbers, strings, and other simple values into more obscure forms.

Example: Strings

Strings often contain information that can be used as landmarks in obfuscated code. For instance, you can search for skeleton.hurt in an obfuscated Minecraft jar and it will lead you to the EntitySkeleton.getHurtSound() method in older versions of the game. Normally, the names are obfuscated but it can be a starting point to begin mapping the obfuscated names back to clear names. To prevent this, any form of obfuscation on the string that breaks a simple search will suffice. Here are some examples.

Splitting the string into parts:

new StringBuilder("s").append("kel").append("eto").append("b.hu").append("rt").toString();

"s".concat("kel").concat("eto").concat("b.hu").concat("rt");

"\0.\1".replace("\0", "skeleton").replace("\1", "hurt");

Manipulating character values:

xor("ltzszkpq1wjmk", 0b1111);

static String xor(String in, int i) {

StringBuilder out = new StringBuilder();

for (char c : in.toCharArray())

out.append((char) (c ^ i));

return out.toString();

}

Breaking into an array or general construction via an array of bytes/characters:

new String(new byte[] {115, 107, 101, 108, 101, 116, 111, 110, 46, 104, 117, 114, 116});

Example: Primitives

Primitives like byte, short, char, int, and long generally don't have as much value for reverse engineers as Strings but it is still common to see these obfuscated. The floating point primitives float and double can be obfuscated but its generally rare to see this. Usually obfuscated primitives are done so locally within method code with a series of mathematical operations such as +, -, *, &, ~, ^, <<, >> and |, but there's nothing stopping you from being a little bit more creative than that.

int width = 800;

int height = 600;

// obfuscated

int width = (1600 >> 2) * (1 << 1);

int height = 18 + (((0b101010100 ^ 0b1100) << 2) % 197) * 3;

// also obfuscated

int width = new Random(411294766).nextInt(10000);

int height = -7 + ("sad".hashCode() & 0b1000000000) + ("cat".hashCode() >> 10);

Cleaning up constant obfuscation with Recaf

See the following page: Transformers

Flow Obfuscation

Flow obfuscation aims to make the logical execution order of the application more confusing.

Example: Opaque predicate

Before:

public void print() {

System.out.println("A");

}

After:

private static int j;

public void print() {

if (j != 0) System.out.println(j == 2 << 0x10 ? "B" : "A");

}

static {

j++;

}

Example: Switch

Before:

public void print() {

System.out.println("A");

}

After

@Override

public void print() {

PrintStream ps = System.out;

int i = -1;

i++;

String k = "0";

while (true) {

switch (Integer.parseInt(k)) {

case 0:

i += 7;

k = "28";

break;

case 7:

k = "A";

break;

default:

ps.println(k);

return;

}

}

}

Cleaning up flow obfuscation with Recaf

See the following page: Transformers

Reference Obfuscation

In Java there are several instructions related to reading & writing to fields, and several more for invoking methods. These instructions refer to symbols in the constant pool including the name of the class defining the field or method, then the name and type of the field or method. Generally we'll call these "references". A majority of a Java application's logic consists of references (as opposed to other things like math operations on primitives) and thus a majority of the information about what an application does can be understood by analyzing these references.

Naturally, obfuscation aims to prevent reverse engineers from understanding an application, so how can these references be obfuscated? Simple, by swapping out the references with ones containing less valuable information. One way to do this is by abusing the invokedynamic instruction. What does this "abuse" look like though? Lets take a look at a normal use-case first.

// Overcomplicated way to print "hi"

public class Demo {

public static void main(String[] args) {

exec(Demo::example);

}

// the code we want to run

static void example() {

System.out.println("hi");

}

// runs a runnable

static void exec(Runnable r) {

r.run();

}

}

Lets disassemble this class and see what it looks like:

.inner public static final {

name: Lookup,

inner: java/lang/invoke/MethodHandles$Lookup,

outer: java/lang/invoke/MethodHandles

}

.super java/lang/Object

.class public Demo {

.method public static main ([Ljava/lang/String;)V {

code: {

A:

// Creates a "Runnable" via "LambdaMetafactory" with a MethodHandle pointing to 'void example()'

// First '()V' before the handle is the 'samType' (SAM is an acronym for single-abstract-method)

// MethodHandle = { invokestatic, Demo.example, ()V }

// - Outlines the handle dispatch type (mirroring the invoke instruction opcodes)

// - Outlines where the method is defined (This Demo class)

// - Outlines the method signature (the name and type, example and ()V)

// Second '()V' after the handle is the 'instantiatedMethodType' must match 'samType' or have arguments with subtypes

// - Example: If you had 'samType = (Ljava/util/Collection;)V' you could use 'instantiatedMethodType = (Ljava/util/List;)V'

invokedynamic run ()Ljava/lang/Runnable; LambdaMetafactory.metafactory { ()V, { invokestatic, Demo.example, ()V }, ()V }

// Passes the "Runnable" as a normal parameter

invokestatic Demo.exec (Ljava/lang/Runnable;)V

return

B:

}

}

// The code we want to run

.method static example ()V {

code: {

A:

getstatic java/lang/System.out Ljava/io/PrintStream;

ldc "hi"

invokevirtual java/io/PrintStream.println (Ljava/lang/String;)V

return

B:

}

}

.method static exec (Ljava/lang/Runnable;)V {

parameters: { r },

code: {

A:

// Just run the passed runnable

aload r

invokeinterface java/lang/Runnable.run ()V

return

B:

}

}

}

As you can see in the main method, the invokedynamic instruction can be used to create a new Runnable that is implemented by calling example(). In the source form this comes as a static method reference Demo::example.

But what if we used a lambda instead of a method reference?

When we write exec(() -> example()); the idea still remains the same, but the compiler is now no longer aware that we are just calling example() and thus makes a new generated method to contain the lambda body.

.method public static main ([Ljava/lang/String;)V {

code: {

A:

// Creates a "Runnable" via "metafactory" with a methodhandle pointing to compiler-generated method housing the contents of our lambda body

// MethodHandle = { invokestatic, Demo.lambda$main$0, ()V }

// - lambda$main$0 is the name of the compiler-generated method that holds our lambda body instructions

invokedynamic run ()Ljava/lang/Runnable; LambdaMetafactory.metafactory { ()V, { invokestatic, Demo.lambda$main$0, ()V }, ()V }

// Passes the "Runnable" as a normal parameter

invokestatic Demo.exec (Ljava/lang/Runnable;)V

return

B:

}

}

// The compiler auto-generates methods for each lambda you define in a source file.

// These generated methods are marked as 'synthetic' to denote the compiler made these.

.method private static synthetic lambda$main$0 ()V {

code: {

A:

invokestatic software/Demo.example ()V

return

B:

}

}

You may be wondering what is this LambdaMetafactory? You can read the full JavaDocs on it here but the gist is that you give it a MethodHandle and a descriptor like ()V and it will implement it the desired functional type provided as a return value such as java/lang/Runnable. Different methods in the class serve different purposes, but each that yields a Callsite is a method usable in the invokedynamic instruction. These methods are called "bootstrap methods". This is a core offering of the Java language, and thus most decompilers are designed to understand this class's purpose. Thus, if you used a modern decompiler with this class it would spit out the Demo::example or () -> example() based on whichever one was used.

Ok but how does an obfuscator make use of any of this if decompilers are so smart?

Compiling a lambda in Java source code will generate an invokedynamic using a bootstrap method specified in LambdaMetafactory but there is nothing stopping an obfuscator from creating its own bootstrap method. In fact, an obfuscator doesn't even need to pass a MethodHandle if it controls the the bootstrap method implementation. As an example, consider we make this change to the main method:

invokedynamic run ()Ljava/lang/Runnable; MyBootstrap.find { "lookup-key" }

We can implement MyObfuscator.find like this:

public class MyBootstrap {

public static ConstantCallSite find(

// These first 3 parameters are passed by the JVM and must always exist in a boostrap method definition.

MethodHandles.Lookup lookup,

String callerName,

MethodType callerType,

// Key value specified as the invokedynamic argument, ie "lookup-key" from the example above.

String key) {

ClassLoader loader = MyBootstrap.class.getClassLoader();

MethodHandle handle = switch (key) {

// Provide a case for each possible key we want to pass.

// Our example only has the one..

case "lookup-key" -> {

// Create a MethodHandle by using the passed lookup then find our "Demo.example ()V"

MethodType methodType = MethodType.fromMethodDescriptorString("()V", loader);

yield lookup.findStatic(Demo.class, "example", methodType);

}

default -> null;

};

// Wrap the handle in a CallSite and we are done!

return new ConstantCallSite(handle.asType(callerType));

}

}

We are no longer using LambdaMetafactory and use a custom implementation that maps a passed String to a MethodHandle. There is no easy way to represent this new scheme as it no longer maps to a source code construct, making this class impossible to decompile back to its original form. Some will try their best to show the intention of the code, but are at the end of the day still wrong. Some examples:

- CFR:

MyBootstrap.find("lookup-key", this); - Procyon:

ProcyonInvokeDynamicHelper_1.invoke(this); - FernFlower:

MyBootstrap.find<"lookup-key">(this);

Another fun fact is that despite the implication of the name MethodType you can also apply this form of reference obfuscation to field accessors. The MethodHandle.Lookup has methods for field reading and writing. You can quite easily create an obfuscator that replaces all of these references with a custom lookup. There's not too much of a concern about performance if you do this since these ConstantCallSite values are resolved once, and then cached.

Can Recaf automatically restore the original form?

It depends, but for now the short answer is going to be "not out of the box". The problem is that there are infinite ways to create a bootstrap method, so it becomes very hard to analyze obfuscated applications in a generic way that lets us determine the original intention. We could create specific transformers that target individual patterns used by specific obfuscators, but we are then in a game of cat-and-mouse. One small change in a new version of that specific obfuscator would break our transformer, and then we also need to support multiple versions of that transformer. This would be a massive time sink for us and also create lots of not so great duplicate code. For this reason, creating transformers for specific obfuscators is a process left to the you and the rest of the Recaf community (Plugins can register their own transformers via TransformationManager). Recaf will provide a host of generic obfuscation transformers and additional services for you to build off of though when creating your own transformer.

Loader Obfuscation

Loaders are mechanisms which take binary input data and transform it into loadable classes at runtime. This prevents easy analysis as class files are kept in an arbitrary format and are not immediately viewable.

Example

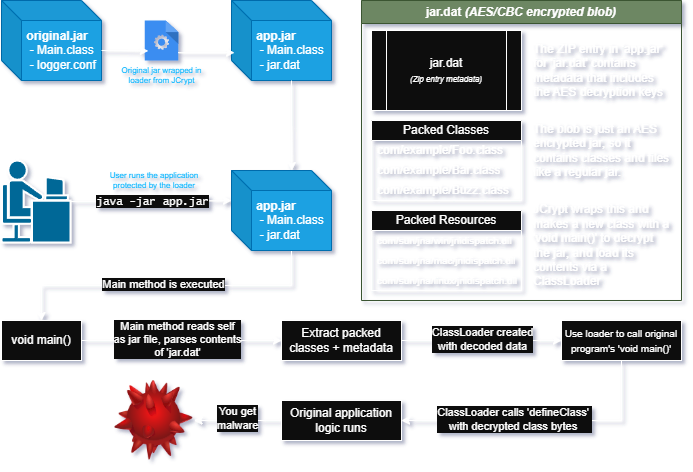

JCrypt is a rather straight forward implementation of loader obfuscation. The contents that will be executed by the runtime are found in the stub directory. Here's an overview of how it works:

A diagram outlining the control flow of how JCrypt operates.

Dumping loaders statically

Recaf will not automatically unpack the loader for you. There are too many patterns to match to make this a fully automated process, but the general approach is simple.

- Find where the packed data is loaded.

- Usually you will see a call to

getResource("jar.dat")orgetResourceAsStream("jar.dat") - Or finding the current running jar and reading its contents like with JCrypt. Generally done via

getProtectionDomain().getCodeSource().getLocation()on a class in the loader which provides the URI to where the class was loaded from.

- Usually you will see a call to

- Find where the packed data is decrypted.

- Find where the decrypted data is used in a

ClassLoader- Classes in this context are generally loaded via

defineClass(name, bytes, 0, bytes.length)

- Classes in this context are generally loaded via

- Copy the code necessary to load the packed data, decrypt it, then instead of using

defineClasswrite the contents to disk.- Run your modified version that saves the decrypted classes and resources to disk.

Once you've written the decrypted classes to disk, you can open them back up in Recaf.

Dumping loaders dynamically

The other option to dump loader contents is to run the application and then use instrumentation or JVMTI to dump the loaded classes. Some considerations before you take this approach:

- You will generally do this in a Virtual Machine and not your host system.

- When you dump the classes, you are only able to dump what is currently loaded. If there are unloaded classes in the packed data that have not been referenced yet, those will not be included in the dumped output.

Example dumping agents:

- Java Instrumentation based agent

- C++ JVMTI based agent (Requires building it yourself to target your current machine architecture)

- Black magic JVMTI from Java-side (Requires building yourself, setting up usage in target VM to dump)

Class Parsing Library Exploitation

How do you prevent a reverse engineer from looking at your class file? By making a really janky class that violates the specification and/or abuses very niche but supported cases. Most Java class file parsers take the specification at face value and assume that inputs are generally "normal" classes that have been freshly compiled by javac. For instance you probably expect the Code attribute to only appear on methods, right? Well, there's nothing stopping you from putting a Code attribute on a field. And when its on a field, the JVM at runtime will ignore it. But a Java class parser will be expected to parse the class in full, so it will try to read the Code attribute on the field. If this Code attribute has bogus values in it, the class parser can easily be tricked into reading outside of the class file bounds, or casting to an illegal type.

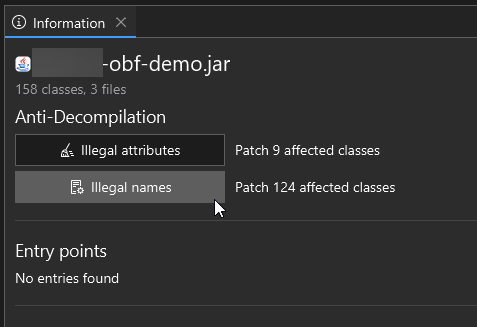

What does Recaf do?

Recaf automatically patches these classes when loading classes into the workspace. We wrote CafeDude (CAFED00D) to read janky classes like this. Its not intended to be used for high level operations like ASM or Javassist are. Instead, its primary purpose is transforming these janky classes into safe classes that can be read by the other high level Java class parsing libraries.

If CafeDude is incapable of patching a class file, Recaf will include the file under the "Files" tree in the workspace explorer. Sometimes this occurs because a file may look like a class at first glance, but is not actually valid. Please report any cases that you believe should be patchable where CafeDude fails and we will take a look.

Java to Native Obfuscation

Reverse engineering Java is easier than reverse engineering native executables in most cases.